
The Seat-to-Outcome Activation Trap

AI is breaking classic SaaS economics.

Intercom’s Fin AI Agent became a nine‑figure ARR product by charging per “Resolution,” not per seat. That shift is becoming common in SaaS because AI model costs scale with usage.

But most teams are only changing pricing, not activation.

They swap “per seat” for “per resolution” and keep the same old PLG motions: a free trial, a feature tour, and an invite-your-team prompt.

Seat-based PLG optimizes for “a user took an action,” while outcome-based PLG must prove “the AI did the job correctly, every time.” Until self-serve onboarding is rebuilt around that outcome, the new pricing won’t make sense to the customer.

Seat vs. Outcome Activation: What Really Changes

In seat-based SaaS, activation is personal and fast. A user signs up, clicks around, and within minutes creates something (a doc, project, or dashboard). The aha moment happens in single-player mode: "I can see how this helps me." Then they invite teammates, add more seats, and switch to multiplayer mode.

Read more on Seat-based Pricing here.

With outcome-based AI products like Intercom Fin, the buyer is usually an admin, and their question changes to “Will this work reliably with my content and use cases? How often will it fail?” Their risk is twofold: bad AI answers in front of customers, and a bill for outcomes that didn't deliver the value they were expecting.

| Aspect | Seat-Based | Outcome-Based (Fin-style) |
| --- | --- | --- |
| Aha moment | User action completed | Verified AI outcome delivered |
| Primary buyer | End-user (personal value) | Admin (workflow ROI) |
| Setup required | Minutes (create, invite, explore) | Hours (knowledge, routing, guardrails) |
| Pricing risk | Fixed, predictable | Variable, tied to AI performance |
| Trust driver | Familiar UX, peer adoption | Accuracy ratings, visible fix loops |
| Failure mode | Low engagement → quiet churn | One wrong answer → permanent distrust |

Seat-based PLG is about helping people do more. Outcome-based PLG is about getting systems to do work autonomously, and proving that those systems are safe, accurate, and worth paying for.

In outcome-based AI, a bad experience, especially one that's billed for, can permanently damage trust in the product, the company brand, and the pricing model all at once.

Self-serve onboarding for an outcome-based product has no margin for error.

That means the self-serve journey must be designed for an admin configuring a workflow, not an end-user exploring features.

Why CAC Increases, and Why PLG Is the Fix

When companies move to outcome-based pricing without fixing activation, CAC increases.

Without a new sales playbook, sales reps revert to selling what they know: the proven seat-based model. Now they are selling something called a “Resolution,” which can be a moving target and is difficult to forecast.

As a result, the sales-led POC cycle, which previously had established playbooks and lasted 30 days, now stretches to 60 days. Deals stall as everyone negotiates and tries to figure out what a Resolution or resolved ticket even means. Buyers need more education and want proof before committing.

Seat-based buyers understand the purchase easily: "I just buy seats. How many people on my team need to use this?" Outcome-based buyers need verification: "Show me that this works." This leads to a very expensive back-and-forth process. LTV is also uncertain, so it is hard to judge how much marketing spend is justified.

The big structural fix is to build self-serve activation, the PLG experience: a free trial or POC that lets customers connect their own knowledge base and content, test the AI, and validate real resolutions before signing or buying. Because LTV is uncertain, SaaS companies will hesitate to spend thousands of dollars on a sales-run proof of concept. PLG lets the product demonstrate its value instead. The PLG experience becomes a requirement, and it makes the outcome-based pricing model run more smoothly operationally.

The Activation Timeline: From Signup to First Paid Resolution

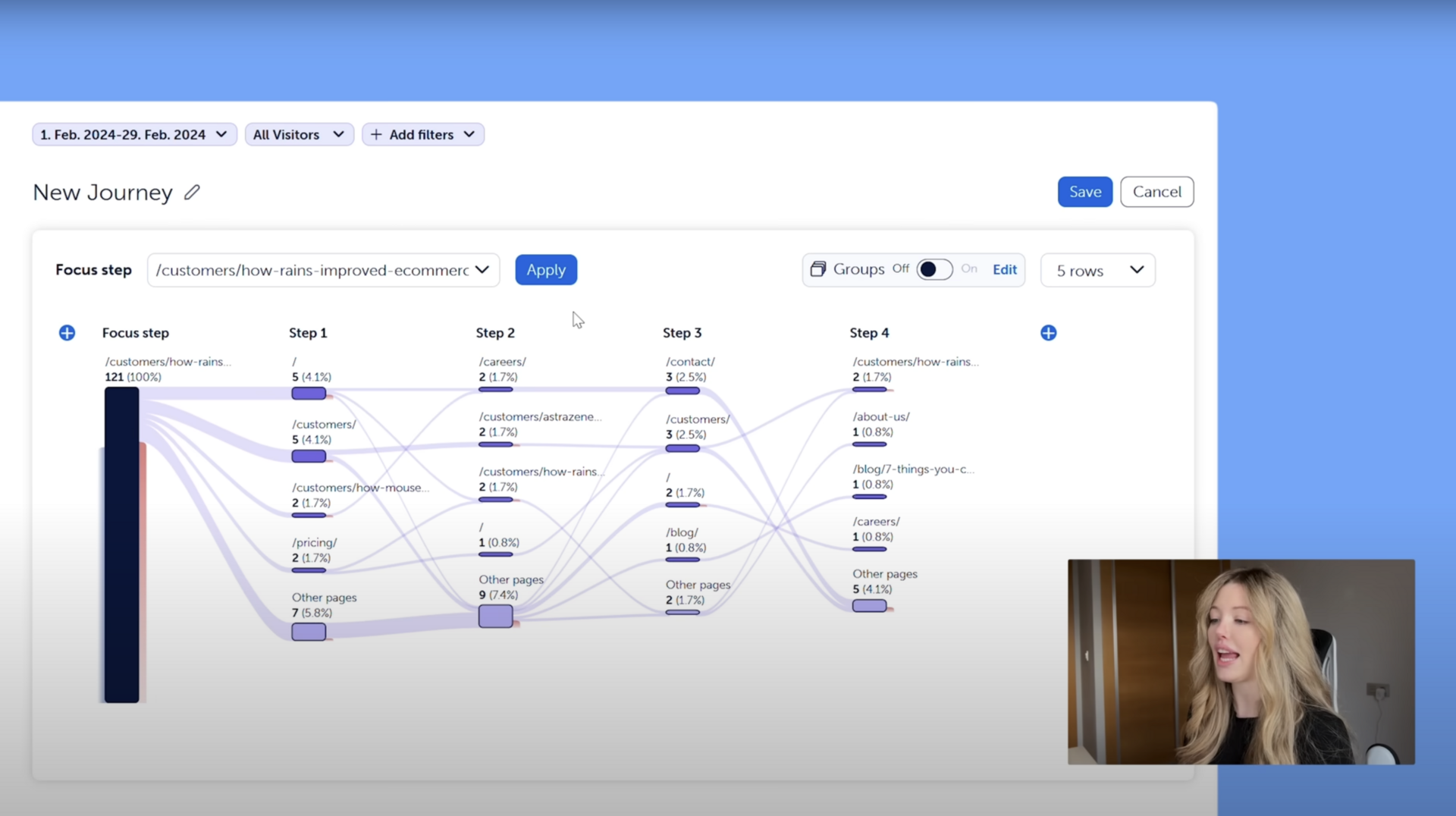

How do you design PLG onboarding for an outcome-based support AI like Intercom Fin?

What exactly happens in the first 1 minute, 10 minutes, 1 hour, 24 hours, and 7 days that gets an admin from “curious” to “confident,” and ready to pay for resolutions?

First 1 Minute: The Promise Is Crystal Clear

The admin lands on a signup page that doesn’t just sell “AI support.” It makes a very specific promise:

“Fin AI Agent: $0.99 per resolution—pay only for resolved customer conversations.” Source: Fin Pricing

The moment they create an account, they see:

Progress through the Fin framework: Content → Test → Deploy → Analyze (Intercom calls this the Fin Flywheel).

A usage/resolutions indicator.

A clear starting action: “connect your knowledge source and let Fin learn from it.”

The most important psychological milestone for activation is when the admin understands the setup, the cost, and the action needed to personalize the AI.
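To make the cost side of that promise concrete, here is a minimal sketch of the arithmetic behind a usage/resolutions indicator. The $0.99 price comes from the Fin pricing quoted above; the `UsageSnapshot` type, the function names, and any volumes you plug in are hypothetical and only for illustration.

```python
from dataclasses import dataclass

PRICE_PER_RESOLUTION = 0.99  # USD, from the Fin pricing quoted above


@dataclass
class UsageSnapshot:
    """Hypothetical data behind a usage/resolutions indicator."""
    conversations_attempted: int  # conversations the AI tried to handle
    conversations_resolved: int   # conversations that count as billable resolutions


def current_bill(usage: UsageSnapshot) -> float:
    """Only resolved conversations are billed; attempts alone cost nothing."""
    return round(usage.conversations_resolved * PRICE_PER_RESOLUTION, 2)


def projected_bill(monthly_conversations: int, resolution_rate: float) -> float:
    """Estimate a monthly bill from expected volume and an assumed resolution rate."""
    if not 0.0 <= resolution_rate <= 1.0:
        raise ValueError("resolution_rate must be between 0 and 1")
    expected_resolutions = round(monthly_conversations * resolution_rate)
    return round(expected_resolutions * PRICE_PER_RESOLUTION, 2)
```

An admin who can see both numbers (what has been billed so far, and what a month might cost at a given resolution rate) has less reason to fear a surprise invoice.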

First 10 Minutes: The Setup Moment (Connect Knowledge, Show Real Intelligence)

The highest-leverage activation action for an outcome-based AI agent is connecting a real knowledge source (e.g., pasting a help center URL, uploading a PDF or policy doc, or connecting an existing knowledge base).

Having the AI answer a sample of real questions using the customer’s own content is the WOW moment.

Don’t rely on sandbox data or examples. They can show how the product works, but the goal is to get the user to set up personalization and to build trust in how the AI works for them, not for an example.

Seeing their content used intelligently is a deeper aha than any canned demo.

First 1 Hour: Build Trust Through Testing and Control

By the end of the first hour, admins should feel two things:

“This AI is actually pretty good with my content.”

“If it goes wrong, I know how to control and correct it.”

The product should guide them to use a test console to fire real or recent customer questions at the AI, rate answers (good / needs improvement), and see why the AI responded the way it did. They should also be able to add specific guidance (tone, business policy rules, and escalation triggers for handoff to a human).

When an answer is wrong, the path to improvement must be obvious:

“Here’s the article/section the AI used.”

“Edit this content or add a new answer template.”

“Re-test and see the updated response.”

In other words, hour one is about trust-building via visibility and control. Without this, one bad answer during a live deployment will kill adoption.
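The test-and-fix loop above can be sketched as a small data model. This is an illustration under assumptions, not how Fin is implemented: `ConsoleResult`, the rating labels, and the helper names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ConsoleResult:
    """One question fired at the AI from a (hypothetical) test console."""
    question: str
    answer: str
    source_article: str            # the article/section the AI used
    rating: Optional[str] = None   # "good" or "needs_improvement"; None if unrated


def needs_fix(results: List[ConsoleResult]) -> List[ConsoleResult]:
    """Return the answers the admin rated as needing improvement."""
    return [r for r in results if r.rating == "needs_improvement"]


def articles_to_edit(results: List[ConsoleResult]) -> List[str]:
    """Unique source articles behind poorly rated answers, in first-seen order."""
    seen: List[str] = []
    for r in needs_fix(results):
        if r.source_article not in seen:
            seen.append(r.source_article)
    return seen
```

The point of keeping `source_article` on every result is visibility: a wrong answer always points to the content to edit, so the admin can fix it and re-test.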

First 24 Hours: Ship the First Live Resolution

By the end of day one, the AI should have resolved at least one real customer question end-to-end with:

AI taking the first shot.

Optional human handover if uncertain.

A rating (CSAT or thumbs up/down) captured from the end user.

This means:

The admin enables the AI on a single, low-risk channel (e.g., web chat on a subset of pages or a “beta” queue).

Handover rules are conservative:

Confidence threshold below which humans take over.

Trigger phrases that always pull in a human.
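As a rough sketch, conservative handover rules like these could be expressed as follows. The threshold value, the trigger phrases, and the `route` function are assumptions for illustration; a real product would expose its own settings and its own confidence signal.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HandoverRules:
    # Hypothetical conservative defaults for a day-one rollout.
    min_confidence: float = 0.8
    trigger_phrases: List[str] = field(
        default_factory=lambda: ["refund", "cancel", "legal"]
    )


def route(message: str, ai_confidence: float, rules: HandoverRules) -> str:
    """Return "human" or "ai" for an incoming customer message."""
    text = message.lower()
    # Trigger phrases always pull in a human, regardless of confidence.
    if any(phrase in text for phrase in rules.trigger_phrases):
        return "human"
    # Below the confidence threshold, humans take over.
    if ai_confidence < rules.min_confidence:
        return "human"
    return "ai"
```

Checking trigger phrases before confidence is a deliberate choice in this sketch: a confident answer to a sensitive topic is still routed to a person.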

The goal of day one is not to maximize automation; it’s to get one clean, fully visible, fully rated resolution through the system.

At this point, the credits meter moves. The admin has seen:

Which conversations counted as “resolved by AI.”

How ratings looked.

That there was a clear off-ramp to humans when needed.

Now the outcome-based pricing model finally “clicks.”

First 7 Days: Create a Resolution Learning Loop

Over the first week, activation means showing that AI performance improves with use.

The product should lean into:

A simple resolution dashboard:

Number of conversations AI attempted.

Number it fully resolved.

Resolution rate, involvement rate, and satisfaction.

A content improvement queue:

List of conversations where AI struggled or was rated poorly.

Direct links to update or add content to fix these gaps.

Prompts to:

Add more knowledge sources.

Expand to a second channel (email, a different web surface).

Invite teammates (support managers, content owners) to contribute.

This closes the loop:

AI attempts resolutions.

Admins and users rate outcomes.

The system surfaces problem cases.

Admins fix content or rules.

Resolution rates improve, making every future credit feel more valuable.

At that point, credits start feeling like a lever, not a gamble.
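The dashboard and the content improvement queue can be sketched from the same conversation log. The metric definitions here are assumptions (involvement rate as the share of all conversations the AI attempted, resolution rate as resolved over attempted), and the `Conversation` fields and the low-rating cutoff are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Conversation:
    id: str
    ai_attempted: bool
    ai_resolved: bool
    rating: Optional[int] = None  # e.g. 1-5 CSAT; None if unrated


def dashboard(conversations: List[Conversation]) -> Dict[str, object]:
    """Summarize attempts, resolutions, rates, and satisfaction."""
    total = len(conversations)
    attempted = [c for c in conversations if c.ai_attempted]
    resolved = [c for c in attempted if c.ai_resolved]
    ratings = [c.rating for c in attempted if c.rating is not None]
    return {
        "attempted": len(attempted),
        "resolved": len(resolved),
        "involvement_rate": len(attempted) / total if total else 0.0,
        "resolution_rate": len(resolved) / len(attempted) if attempted else 0.0,
        "avg_rating": sum(ratings) / len(ratings) if ratings else None,
    }


def improvement_queue(conversations: List[Conversation], low_rating: int = 2) -> List[str]:
    """IDs of conversations where the AI struggled or was rated poorly."""
    return [
        c.id
        for c in conversations
        if c.ai_attempted
        and (not c.ai_resolved or (c.rating is not None and c.rating <= low_rating))
    ]
```

Tracking these numbers week over week is what lets the admin see the loop working: as queue items get fixed, the resolution rate should climb.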

Reviewing the Mechanisms: Onboarding Bumpers and PQLs

The first mechanism is finding the right free credit limit, so the buyer can train the AI and think in terms of outcomes before committing to a purchase.

Previously, when I was selling AI-answered security questionnaires, we gave new customers a small batch of free AI credits. They could try the product with pre-filled sample data, or upload a real security questionnaire and have the AI draft a response.

Customers experienced a bigger WOW moment when it was fully customized to their situation. Uploading their own security questionnaires and seeing the AI-generated answers helped them realize that the outcome was solving a real job that previously took weeks.

While the model looked usage-based, it was actually outcome-based, because the AI-generated answers had to pass audits and unblock sales deals for compliance. If they didn't, customers wouldn't want to use more credits in the future. That's why activation really focuses on trust, personalization, and refinement, not just hitting "generate" and getting results like a slot machine.

When an admin skips testing and gets the wrong answer in a live conversation, then receives a bill, they don't think, "I should have tested more." They think the AI doesn't work, and you don't get a second chance with AI products.

Making sure activation happens (the admin connects the right content, tests before deploying, and builds refinements) reduces downstream churn. To achieve this, we need to implement product onboarding bumpers. These can remind the admin to connect their help center to see real answers, prompt them after a real test to go live on chat, and then nudge them to review the results.

For example, a notification could say, "Your resolution rate improved 12% this week. Add more content to keep improving." The key is making sure the admin is engaging with the results, making refinements, and not doubting the effectiveness of the AI.
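One way to think about bumpers is as a simple rule that picks the next nudge from the admin's current state. The sketch below is hypothetical: the `AdminState` fields, the five-test minimum, and the message wording are assumptions, not a description of any real product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdminState:
    knowledge_connected: bool = False
    tests_run: int = 0
    live_channel_enabled: bool = False
    results_reviewed: bool = False


def next_bumper(state: AdminState, min_tests: int = 5) -> Optional[str]:
    """Return the next onboarding nudge, or None once every step is done."""
    if not state.knowledge_connected:
        return "Connect your help center to see real answers."
    if state.tests_run < min_tests:
        remaining = min_tests - state.tests_run
        return f"Run {remaining} more test questions before going live."
    if not state.live_channel_enabled:
        return "Your tests are done. Go live on chat."
    if not state.results_reviewed:
        return "Review your first live results."
    return None
```

The ordering mirrors the activation path in this article: connect, test, deploy, review. An admin cannot be nudged to go live before testing.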

Why This Wins at Scale

Outcome-based PLG scales by making the AI better: more resolutions, better content, and higher trust.

The vendor and customer share the same incentive. Intercom reinforces this with a $1M performance guarantee if Fin doesn't hit agreed resolution targets, which transforms the buyer's question from "what if it fails?" to "what happens when it succeeds?"

Prove outcomes before billing. Make every activation step build toward a live resolution. Focus on AI refinement and testing, and build trust with the customer.

If you’re building outcome-based AI, the audit question isn’t “is our pricing right?” It’s: where do admins stall in the first seven days, and what does the product do to push them to the first live outcome safely?

I write weekly about what I'm learning from launching and leading PLG. Feel free to subscribe.

I am Gary Yau Chan. 3x Head of Growth. 2x Founder. Product Led Growth specialist. 26x hackathon winner. I write about #PLG and #BuildInPublic. Please follow me on LinkedIn, or read about what you can hire me for on my Notion page.
